A Wary Wander into ChatGPT, with Opt-Out Instructions

My child and I are sitting on the third floor of McGill’s Redpath library, surrounded by books about art and architecture. Today, we’ve decided to attempt a foray into ChatGPT, and to do it in a way that does as little harm as possible.

It is 11:16am in Montreal, and we begin by navigating our browser to https://chat.openai.com/auth/login

Against a simple black background, we are presented with just this message: “Welcome to ChatGPT” and then “Log in with your OpenAI account to continue.” We can either “Log in” or “Sign up.” I guess we have to sign up. (I have already clicked on the very small “Terms of use” and “Privacy policy” links at the very bottom of the page.)

I am asked to give my name and my birthday. I could lie, but I have already given them my real e-mail address in order to log in, so I don’t see the point in subterfuge. The site claims it wants my age only to verify that I am 13 years or older, but the child and I are skeptical. The page also informs me that by clicking “continue” I am agreeing to the terms and privacy policy.

I click.

Now it demands I also give my phone number, so that it can send me a code. My cell tells me 033347, which the child enters for me, giving me a hard time for not remembering it immediately. Kids these days.

We’re in, and the system informs us that this is a “free research preview.” We’re guinea pigs, and they want us to help them “improve [their] systems and make them safer.” There’s a disclaimer too. It says “While we have safeguards in place, the system may occasionally generate incorrect or misleading information and produce offensive or biased content. It is not intended to give advice.” So, I guess I won’t ask it for advice.

We click “next.”

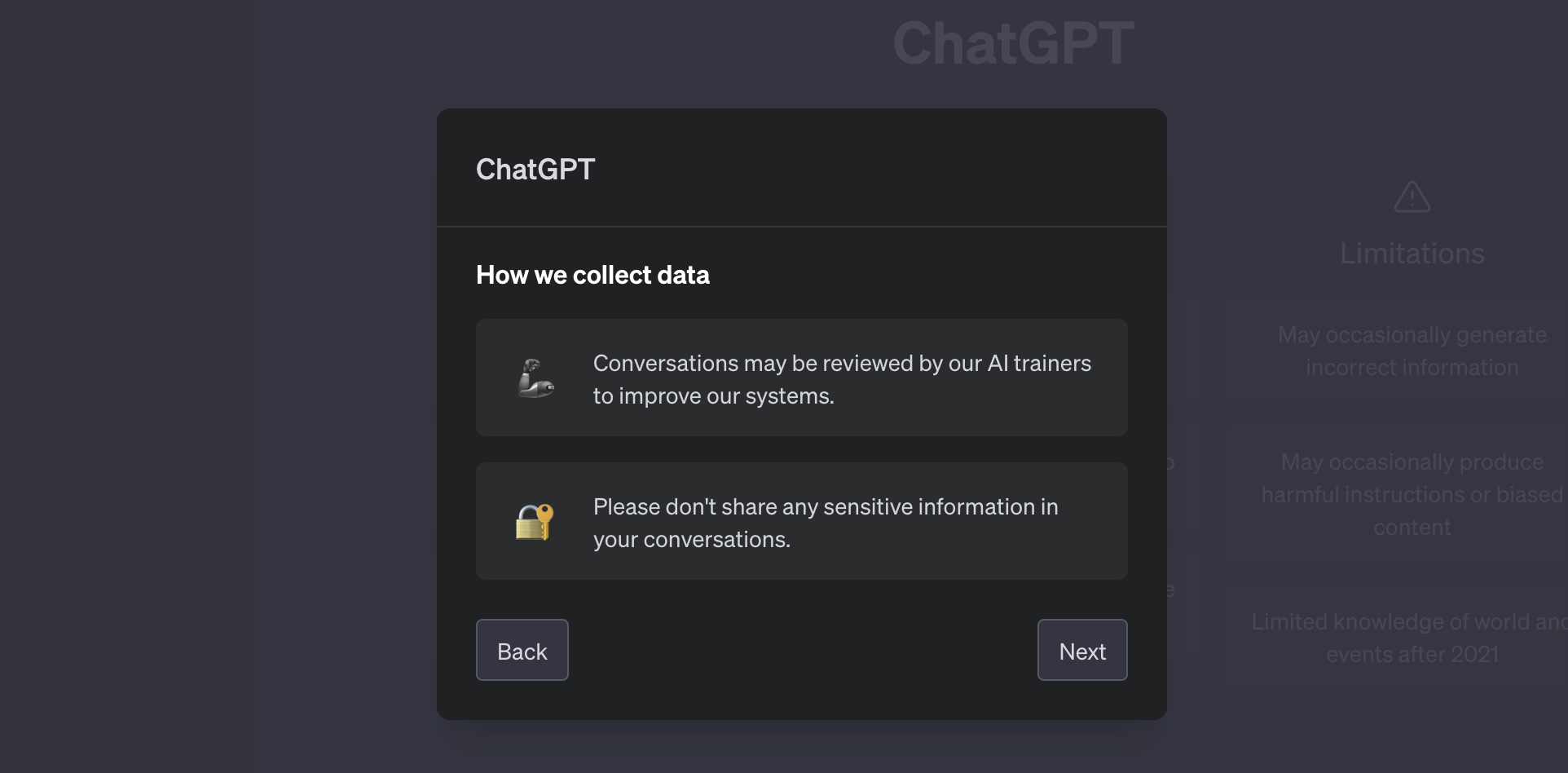

This screen is labeled “How we collect data.” It says that “AI trainers” might be looking at these conversations: the icon here is a little metal robotic arm, with an undeniable bicep. So the anthropomorphizing is strong here. It warns us not to “share any sensitive information,” which seems like good advice on the internet generally. This screen doesn’t tell me that I can opt out of data collection.

“Next.”

They want us to grade the responses and let them know if they’re helpful. There’s a Discord server. Nothing about opting out.

“Done.”

The introductory page gives us three examples, lists three “capabilities” (memory of the conversation, follow-up queries, and guardrails), and then lists three “limitations.” What we learn from GPT-3.5 may be incorrect, harmful, or biased, and it has limited knowledge of anything after 2021. Okay.

As the child points out, they’re attempting the upsell already. We have access to GPT-3.5; GPT-4 has a lock icon next to it. Another section, labeled “upgrade to plus,” informs us that for $20 a month, we can get access to GPT-4.

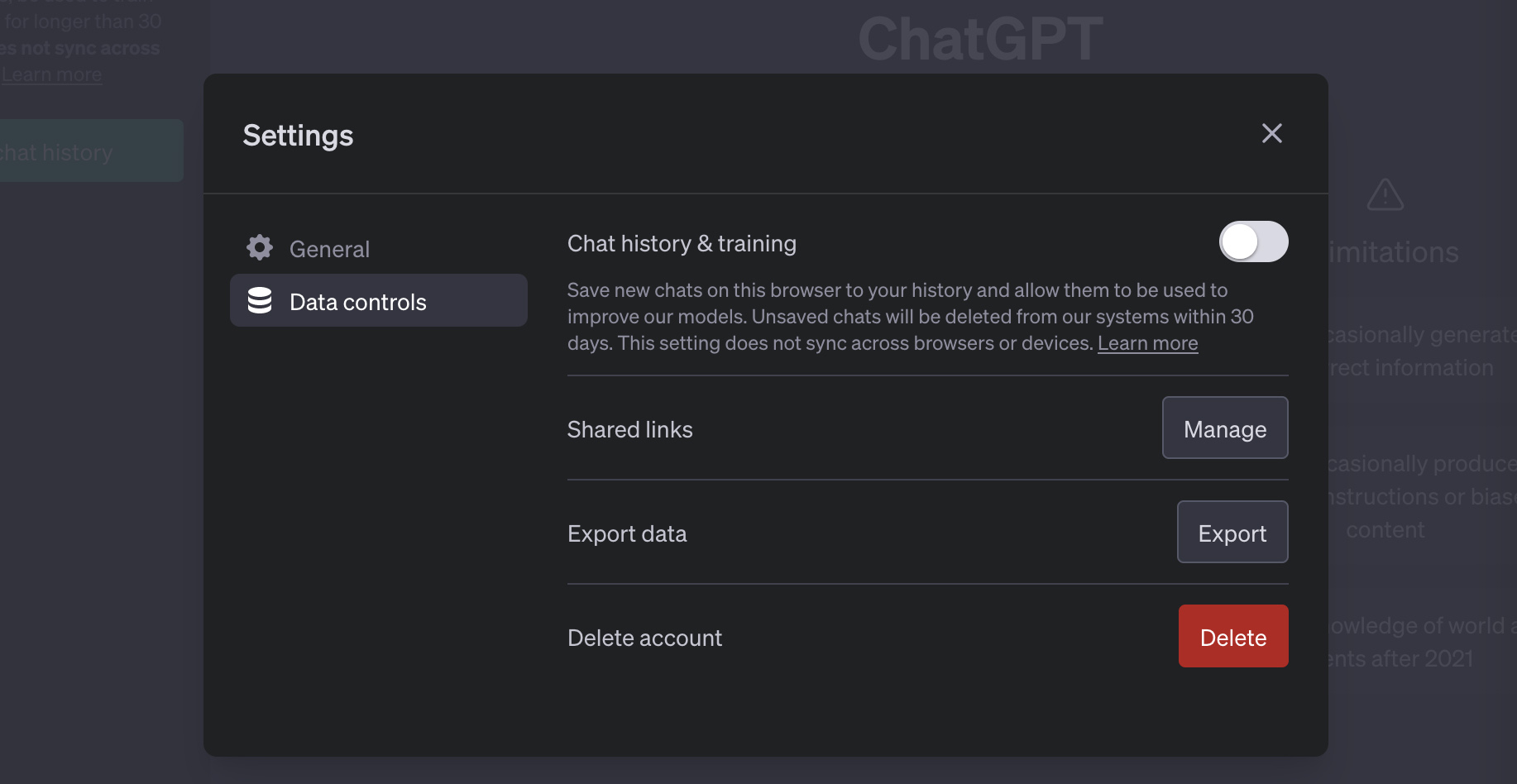

I scope out the settings before we ask any questions. I’m still looking for any mention of opting out. The first tab in settings is “General,” where I can choose a theme or “clear all chats,” which erases the chats from our session, but not from the company’s logs.

Under the next tab, “Data controls,” we see a prominent option labeled “Chat history & training.” This looks like the opt-out option. If we leave the switch on, as it is by default, we’re agreeing to “save new chats on this browser to your history and allow them to be used to improve our models.” If we flip the switch, which I do, we’re warned that “Unsaved chats will be deleted from our systems within 30 days.” It is not clear whether this counts as opting out.

I have dug through the OpenAI website, following breadcrumbs into the company blog, to an entry called “How your data is used to improve model performance.” It says this:

“You can switch off training in ChatGPT settings (under Data Controls) to turn off training for any conversations created while training is disabled or you can submit this form. Once you opt out, new conversations will not be used to train our models.”

Just to be safe, we’re filling out the form too.

The opt-out form is doing its best to convince me to let them use my data. This is about “continuous improvement” of the system. And they are assuring my privacy: they will “remove any personally identifiable information from data we intend to use to improve model performance.” But that doesn’t help if the thing I don’t want to do is help them make their system more powerful.

Finally, they warn me that disallowing training “will limit the ability of our models to better address your specific use case.” I can live with that.

We fill out the form. It involves clicking over to get an “organizational ID” for our “personal account.” We are told that our opt-out has been processed, but then there’s nothing else. The Google Form sent me a copy of my opt-out request. I don’t get any other obvious signal that it worked…